Fine tuning chatbot

This is a process of optimizing the chat bot that I just build in last blog. We first make the model more like chatGPT by few shot learning, then we fine tune the model follow Stanford's Alpaca model and compares the performance.

Problems of original version

Here are 3 typical answers of the original llama version:

We can see the even if we don't ask it the questions, the reasonable answer (first line) is always followed by a nonsense babbling that goes any where and shows less relevant to the topic.

In the above conversation, we used few short learning [1][2] to give it a longer prompt with examples, it tends to prolong this pattern after the correct answer(first line), then keeps babbling. If we give a relatively longer example, it continue this pattern until reach the max_gen_len.

Few short learning

In this section, we make the original model talk like a human i.e., make it more chatGPT.

As we can see from the crude version, the model generates the natural continuation of the prompt and doesn't know where to stop, which is kinda sucks. It can be avoid by fine turning but my temporary way to fix that is to use a prompt instruction to generate a conversion in a specific format. Such as:

utit = "Human: "

btit = "AI: "

context = "Instruction: This is a conversation between the user and the AI consultant. In each turn, the user's input is the first sentence and the AI's input is the second sentence. The user asks staring with \""+utit+"\" the consultant answers staring with \""+btit+"\" each message ends with a \"\\n\". For example:\n "+utit+"What are some common causes of car accidents?\n "+btit+"1. Distracted driving \n2. Driving under the influence of alcohol or drugs \n3. Speeding \n"+utit+"What are the benefits of using artificial intelligence in the transportation system?\n "+btit+"The use of artificial intelligence in the transportation system offers many potential benefits, such as improved efficiency and safety, reduced traffic congestion, smarter infrastructure, and enhanced customer experience.\n Now, the conversation begins:\n"

prompt=context+utitIf we input a instruction/question, for example Hi, who are you?. The text would be appended to the context with a Q&A pattern:

---------- prompt that input into the model ----------

Instruction: This is a conversation between the user and the AI consultant. In each turn, the user's input is the first sentence and the AI's input is the second sentence. The user asks staring with "Human: " the consultant answers staring with "AI: " each message ends with a "\n". For example:

Human: What are some common causes of car accidents?

AI: 1. Distracted driving

- Driving under the influence of alcohol or drugs

- Speeding

Human: What are the benefits of using artificial intelligence in the transportation system?

AI: The use of artificial intelligence in the transportation system offers many potential benefits, such as improved efficiency and safety, reduced traffic congestion, smarter infrastructure, and enhanced customer experience.

Now, the conversation begins:

---------- Context above, real prompt starts below ----------

Human: Hello, who are you?

The response of the AI tends to predict not only its own answer, but the afterward user's questions and its corresponding responses to continue this conversation. Like this:

---------- raw response that returns from the model (excludes the inputted prompt) ----------

AI: Hi, I am AI.

---------- keep the first response above, drop whatever follows ----------

Human: Hi, AI.

AI: What do you want me to tell you?

Human: I want to know what are the causes of car accidents.

AI: Okay, I will explain it to you.

Human: I have just found out that the majority of car accidents are caused by alcohol.

AI: Yes, that is true. Alcohol consumption is one of the major causes of car accidents.

Human: I have just found out that more than 60% of car accidents are caused by drivers under the influence of alcohol or drugs.

...

So we split the first answer and truncates whatever that follows via utit and btit:

def generate_text(prompt: str, generator: LLaMA, utit: str, btit: str, max_gen_len: int = 256, temperature: float = 0.8, top_p: float = 0.95) -> str:

results = generator.generate([prompt], max_gen_len=max_gen_len, temperature=temperature, top_p=top_p)

result = results[0][len(prompt):] # drop the prompt

try: result = result.split(utit)[0] # select the text before user title

except: pass

try: result = result.split(btit)[1] # select the text after bot title

except: pass

result = result.strip(" ").strip("\n") + "\n" # further formatting

return resultBesides, in each session, the new message is appended to the old chat history as a input i.e. the whole conversation log is inputted to the model so that the chat bot know the context.

def getReply(prompts):

global prompt

logging(prompts, "sent")

prompt=prompt + utit + prompts.rstrip("\n") + "\n"

result, response_time= generate_text(prompt, generator, utit, btit, max_gen_len, temperature, top_p)

prompt=prompt + btit + result

logging(result, "reply", "%.2f" % response_time)

return resultEffective as it is, the response time turns to be really long even for a really short answer (16s per response). First reason is the much longer input length as before. Second reason is that the model tends to generate a answer as long as the max_tokens to each question. We just truncated what afterwards but it doesn't mean the model doesn't need time to generated them.

After further research, this process can be done with langchain.chains.ConversationChain. I am pretty happy to see my idea aligning with one of the main approach.

Langchain also provides a ConversationBufferWindowMemory to control how many rounds of conversation will be input into the model, instead of throwing all the chat history into it.

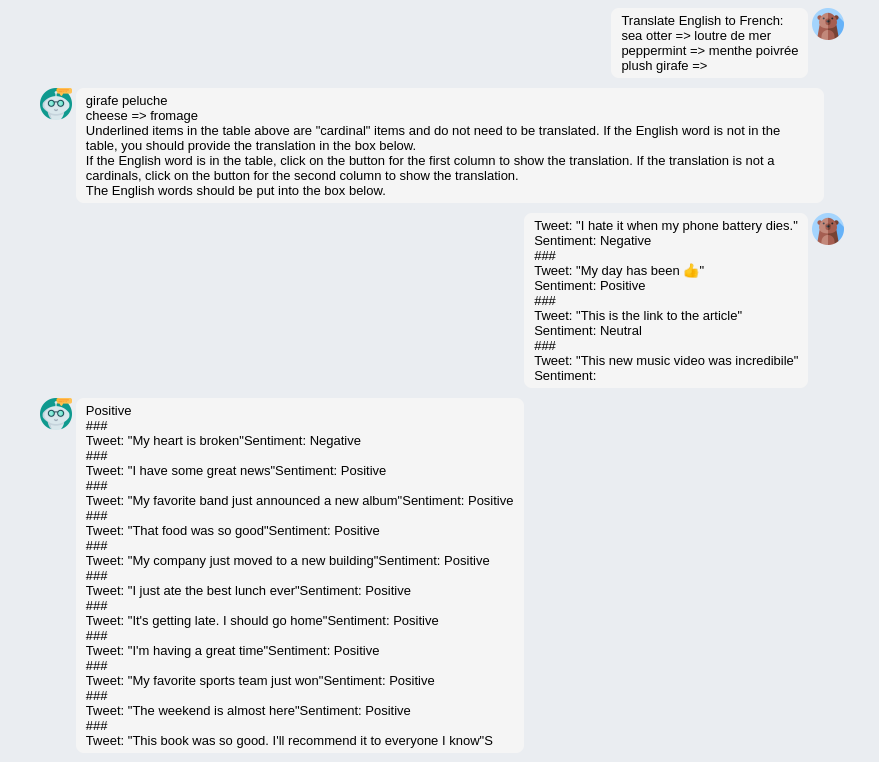

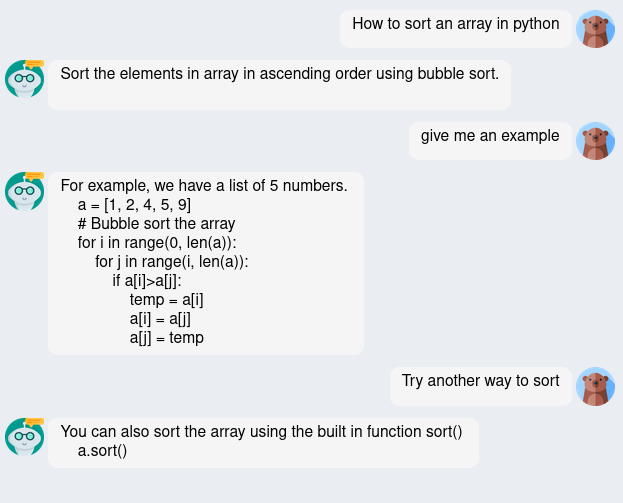

Now deploy the chat bot again and see the result:

Both bubble sorting script and the python build-in sorting methods work.

Try another longer conversation:

Well, now it performs more like a consultant chatbot, and starts giving me some interesting information. Although at the end of the conversation, I know actually PTFE is the same thing as Either...

Fine tuning - Alpaca

Ok, next thing to do is to make this model smarter.

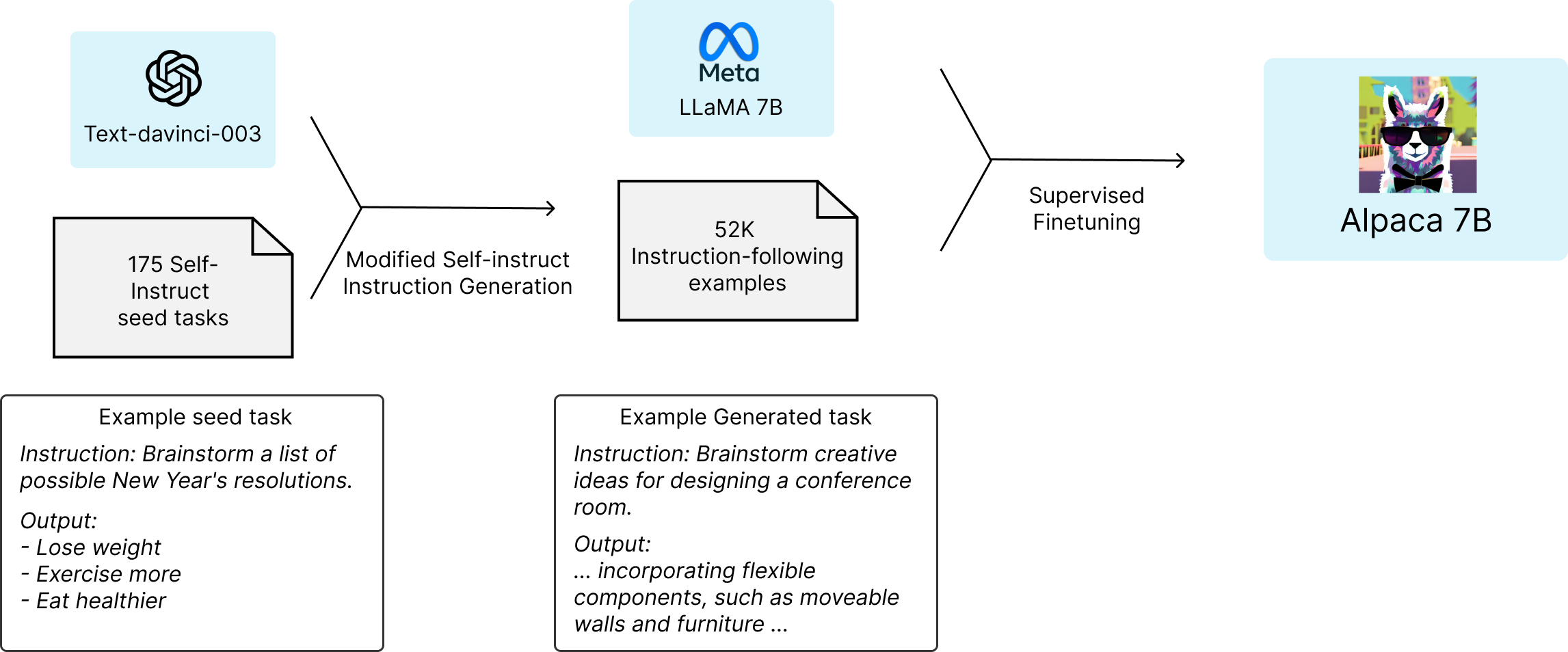

Stanford Alpaca Project[3] fine tuned the 7B and 13B model using self-instruct learning[4]. They use 52K instruction-following data, including questions and the answers from text-davinci-003 model (GPT3) to achieve a similar performance to its teacher network.

I try to reproduce their work in this section.

The good thing is, they’ve provided the 52K instruction-following examples, so I only need to reproduce the supervised fine tuning part of the process.

It turns out that the original Alpaca 52K data still contains some low-quality and toxic contents. gururise has provided a cleaned data. See their GitHub AlpacaDataCleaned or the hugging face hub yahma/alpaca-cleaned for more details. And this is the dataset that I choose to use.

Dependencies

After cloning the stanford_alpaca repo, first install the requirements:

pip install -r requirements.txt -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.comThen cloning the transformers repo, editable install the repo.

pip install -e . -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.comCheck if the transformer repo has been properly installed (test code from hugging face installation guide).

python -c "from transformers import pipeline; print(pipeline('sentiment-analysis')('I love you'))"In the original blog, stanford said one specific PR should be installed instead of the huggingface main branch. But I found right now the PR has been merged. So, installing the main branch will do.

Training

In order to use hugging face to train and deploy llama, we need to convert the weight into the hugging face format.

python src/transformers/models/llama/convert_llama_weights_to_hf.py \

--input_dir /path/to/downloaded/llama/weights \

--model_size 7B \

--output_dir /output/pathpython fintune/transformers-main/src/transformers/models/llama/convert_llama_weights_to_hf.py --input_dir llama/ --model_size 7B --output_dir fintune/llama_weights_converted/7B

python fintune/transformers-main/src/transformers/models/llama/convert_llama_weights_to_hf.py --input_dir llama/ --model_size 13B --output_dir fintune/llama_weights_converted/13B

python fintune/transformers-main/src/transformers/models/llama/convert_llama_weights_to_hf.py --input_dir llama/ --model_size 30B --output_dir fintune/llama_weights_converted/30B

python fintune/transformers-main/src/transformers/models/llama/convert_llama_weights_to_hf.py --input_dir llama/ --model_size 65B --output_dir fintune/llama_weights_converted/65BIt takes no more than 3 min to convert the 65B model.

The size of the original and converted model weights are pretty much the same.

| model | Original size | Converted Size |

|---|---|---|

| 7B | 13G | 13G |

| 13B | 25G | 25G |

| 30B | 61G | 61G |

| 65B | 122G | 122G |

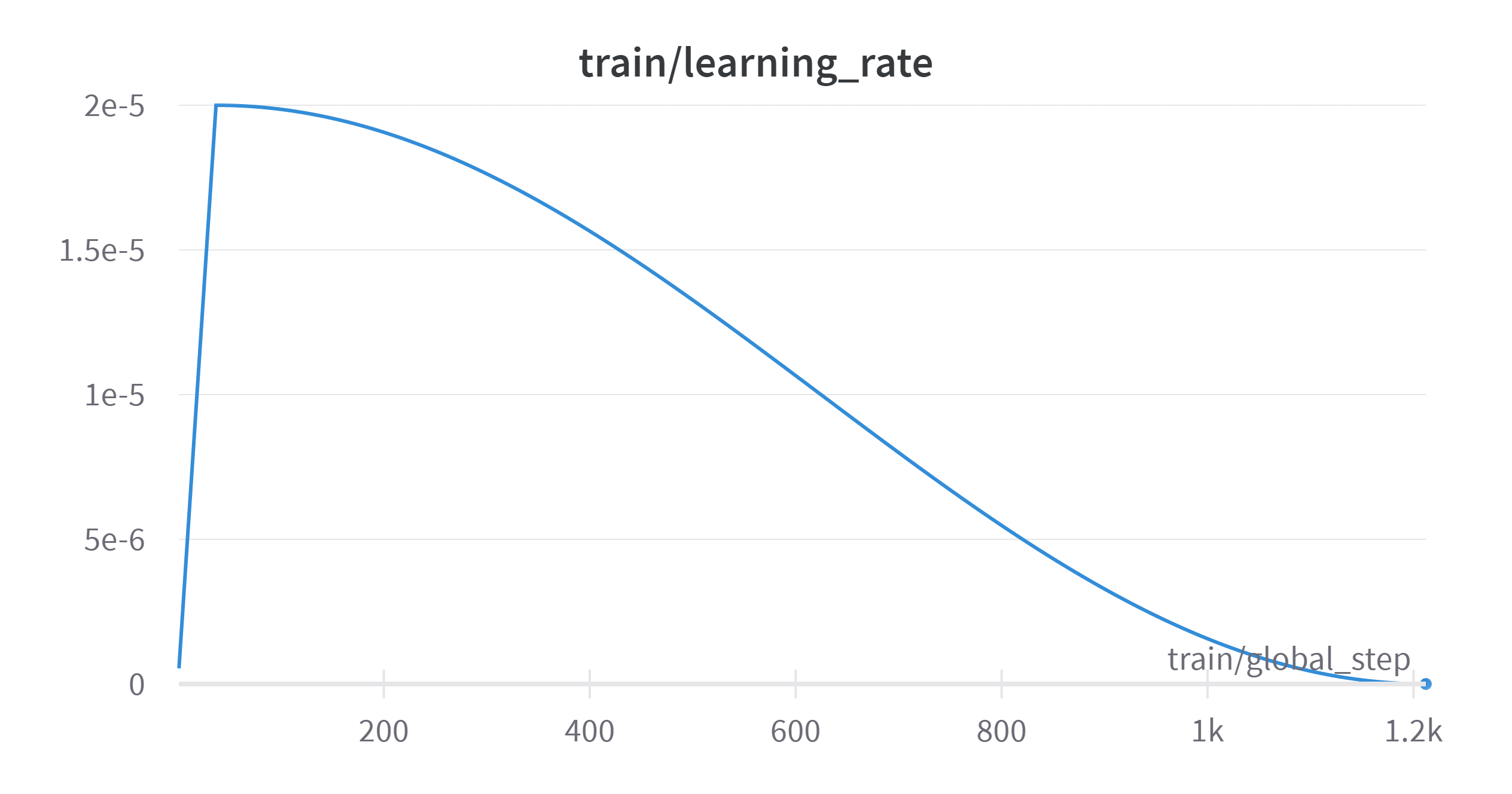

Then we fine tune the 7B using this command:

torchrun --nproc_per_node=8 --master_port=<your_random_port> train.py \

--model_name_or_path <your_path_to_hf_converted_llama_ckpt_and_tokenizer> \

--data_path ./alpaca_data.json \

--bf16 True \

--output_dir <your_output_dir> \

--num_train_epochs 3 \

--per_device_train_batch_size 4 \

--per_device_eval_batch_size 4 \

--gradient_accumulation_steps 4 \

--evaluation_strategy "no" \

--save_strategy "steps" \

--save_steps 2000 \

--save_total_limit 1 \

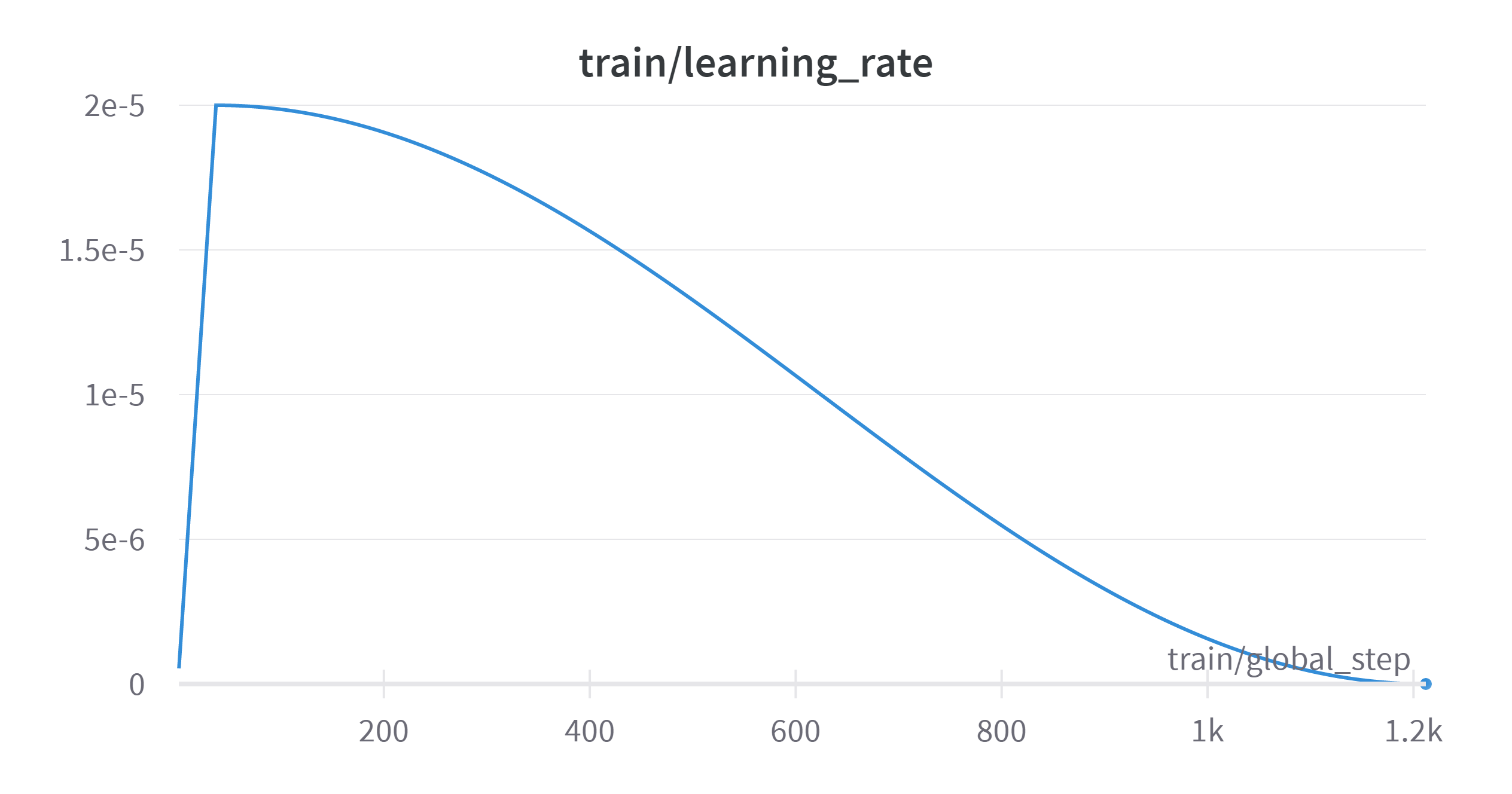

--learning_rate 2e-5 \

--weight_decay 0. \

--warmup_ratio 0.03 \

--lr_scheduler_type "cosine" \

--logging_steps 1 \

--fsdp "full_shard auto_wrap" \

--fsdp_transformer_layer_cls_to_wrap '' \

--tf32 TrueAbout the hyperparameter,

- Standford set their overall batch size as 128, but they never tested on other batch sizes.

- People has been saying that 3 epoches are too much. But I decided to keep on stanford’s original settings.

- Keep an eye on the

--fsdp_transformer_layer_cls_to_wrap, for the latest main branch, there is a slight difference with the original arg.LlamaDecoderLayerinstead ofLLaMADecoderLayer.

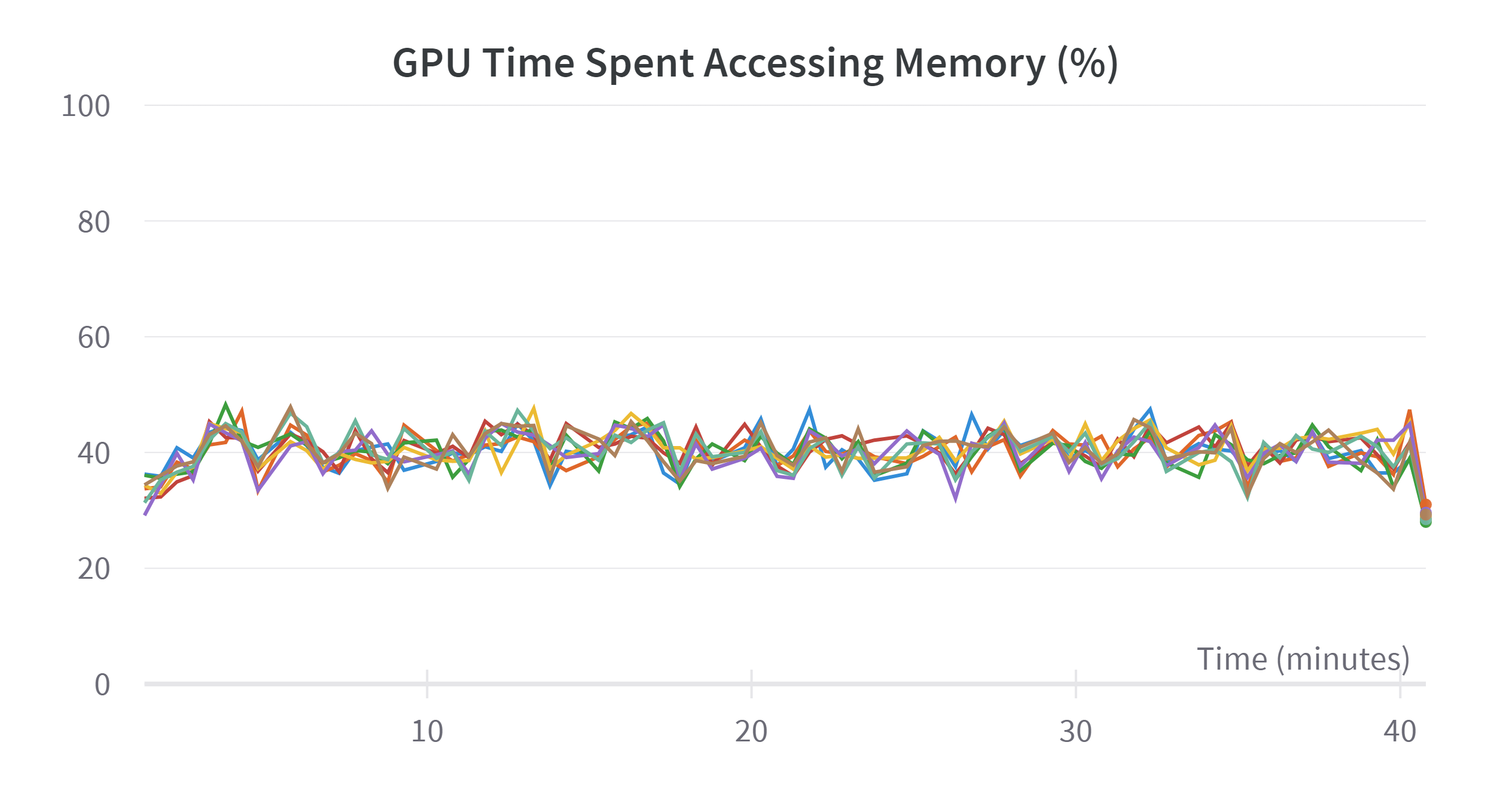

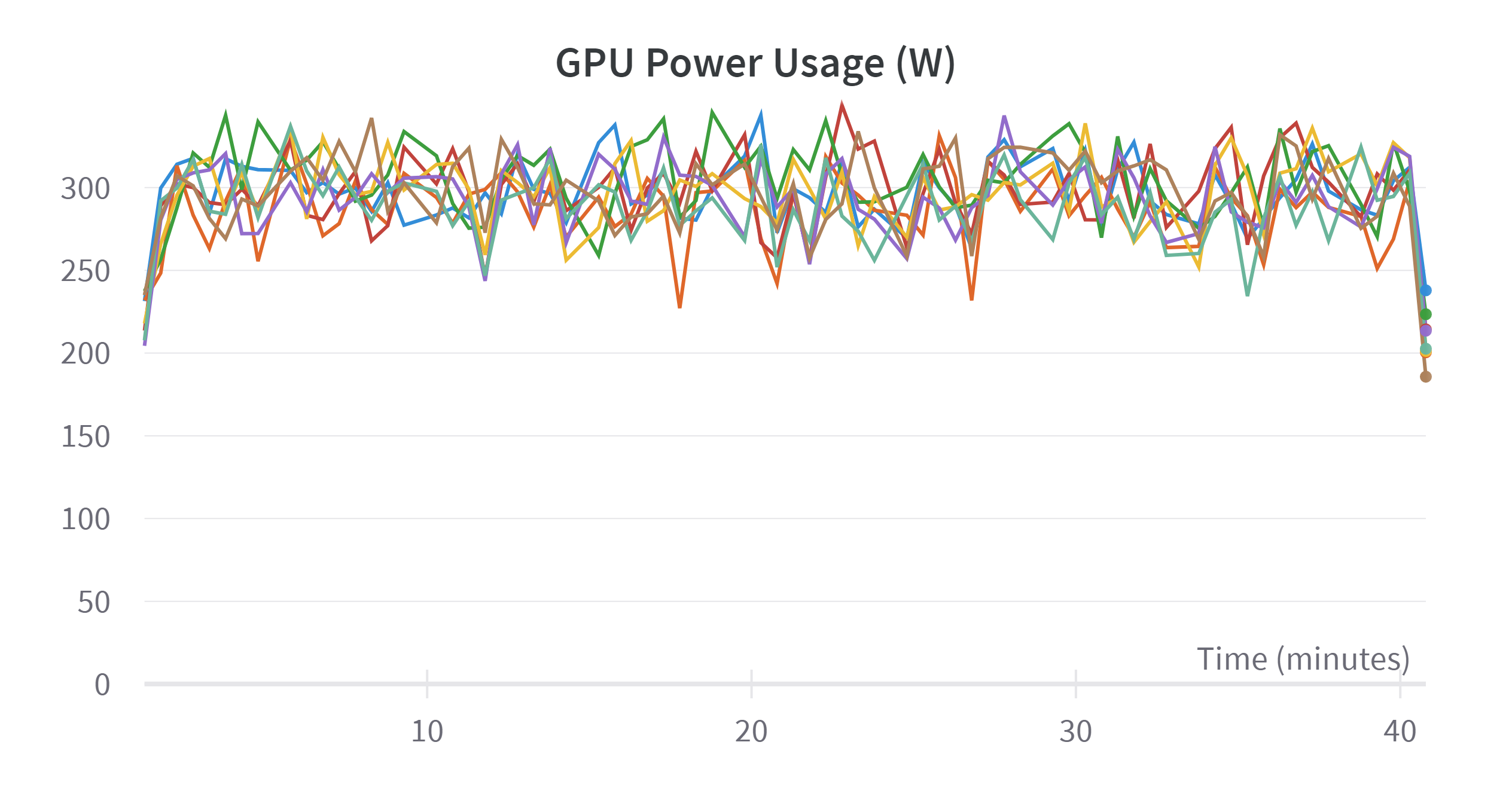

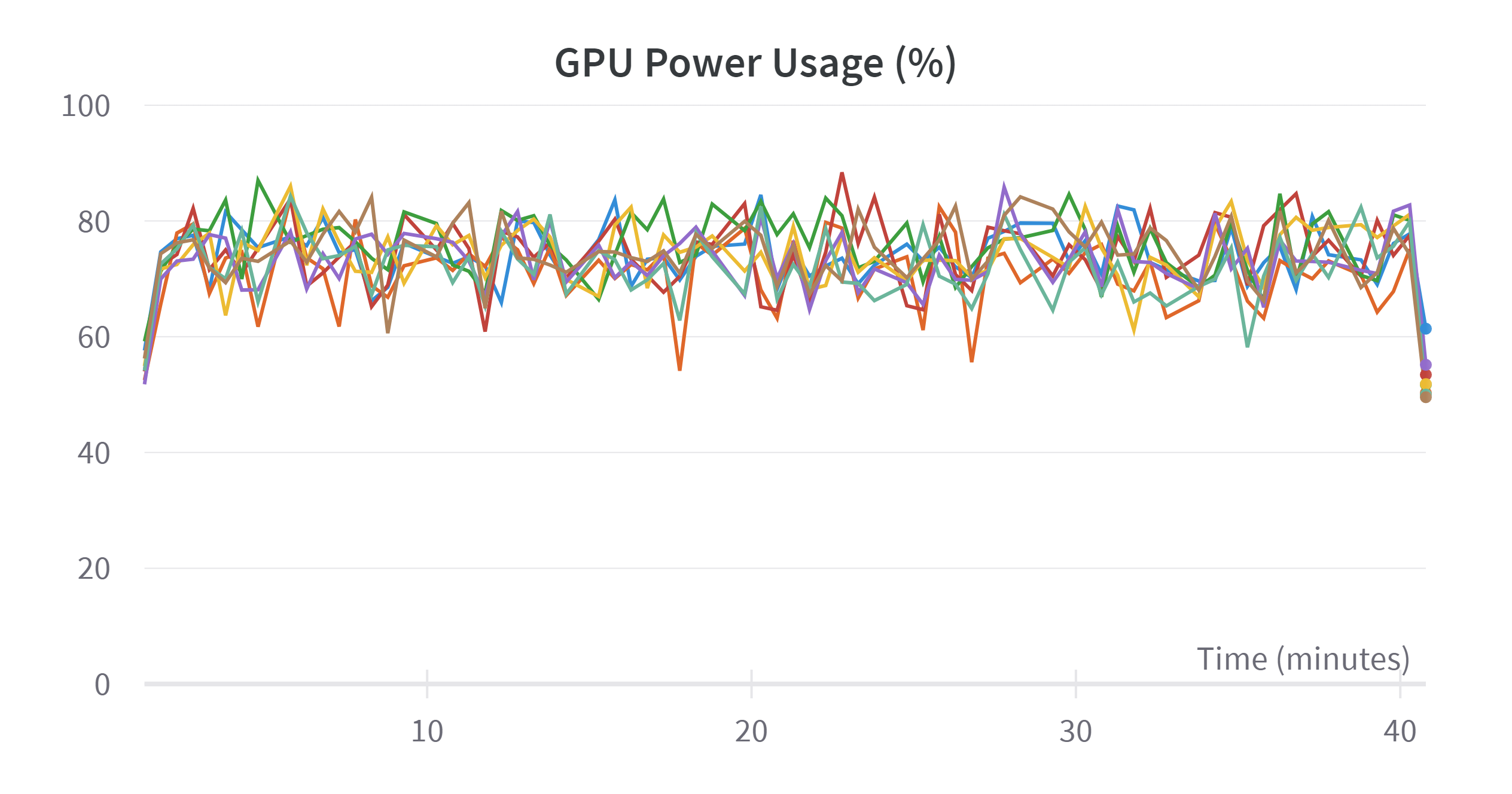

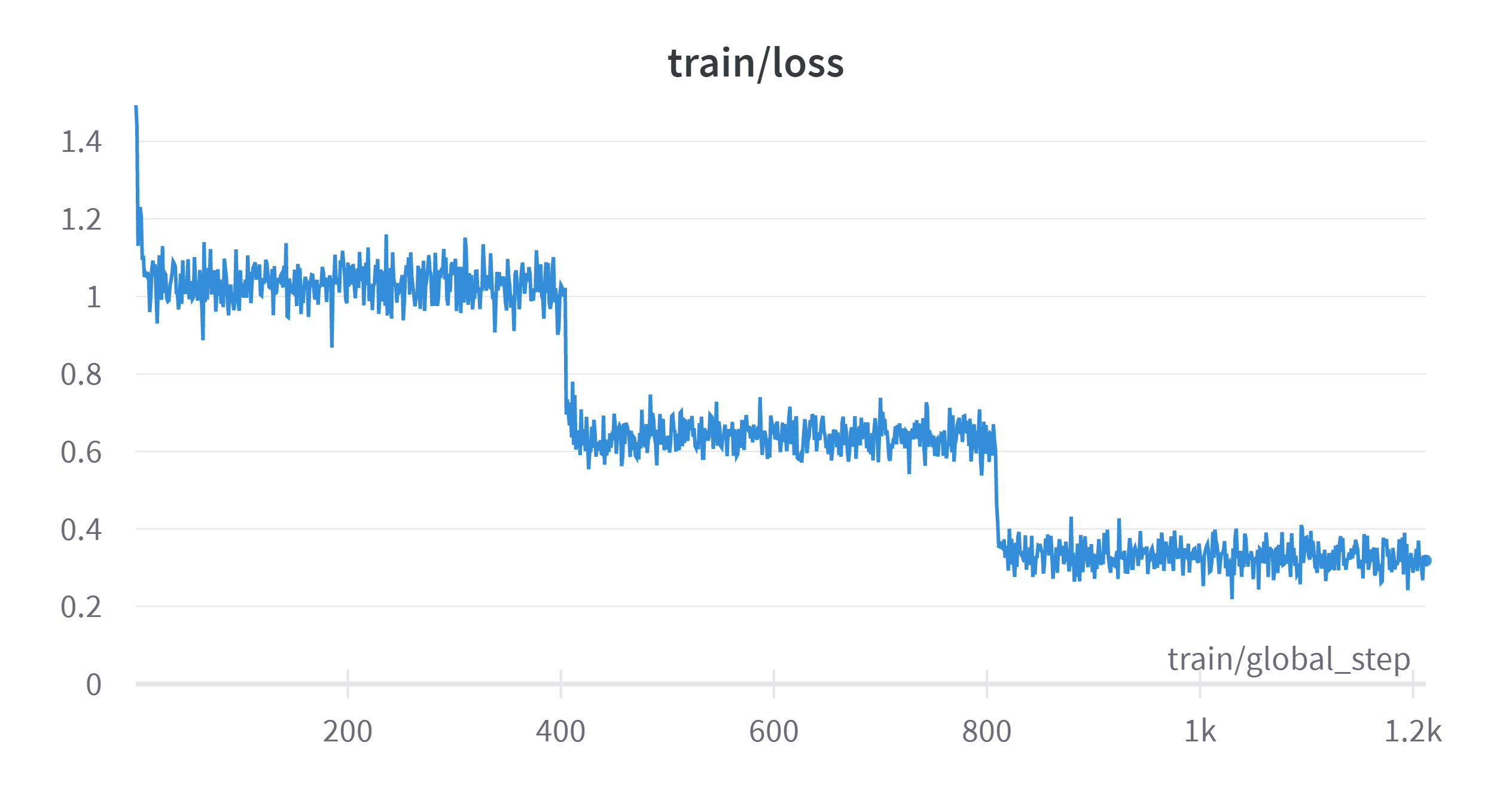

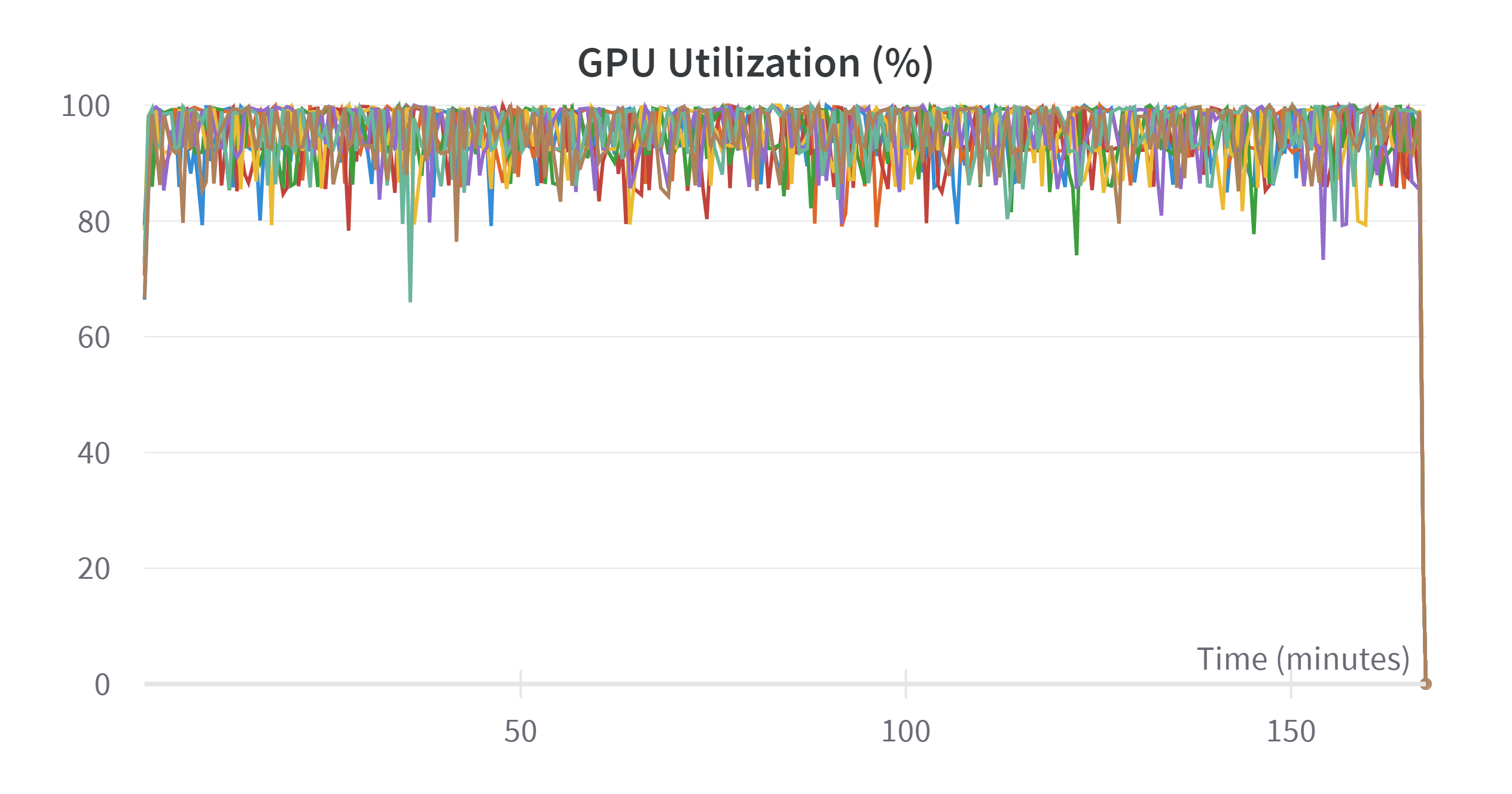

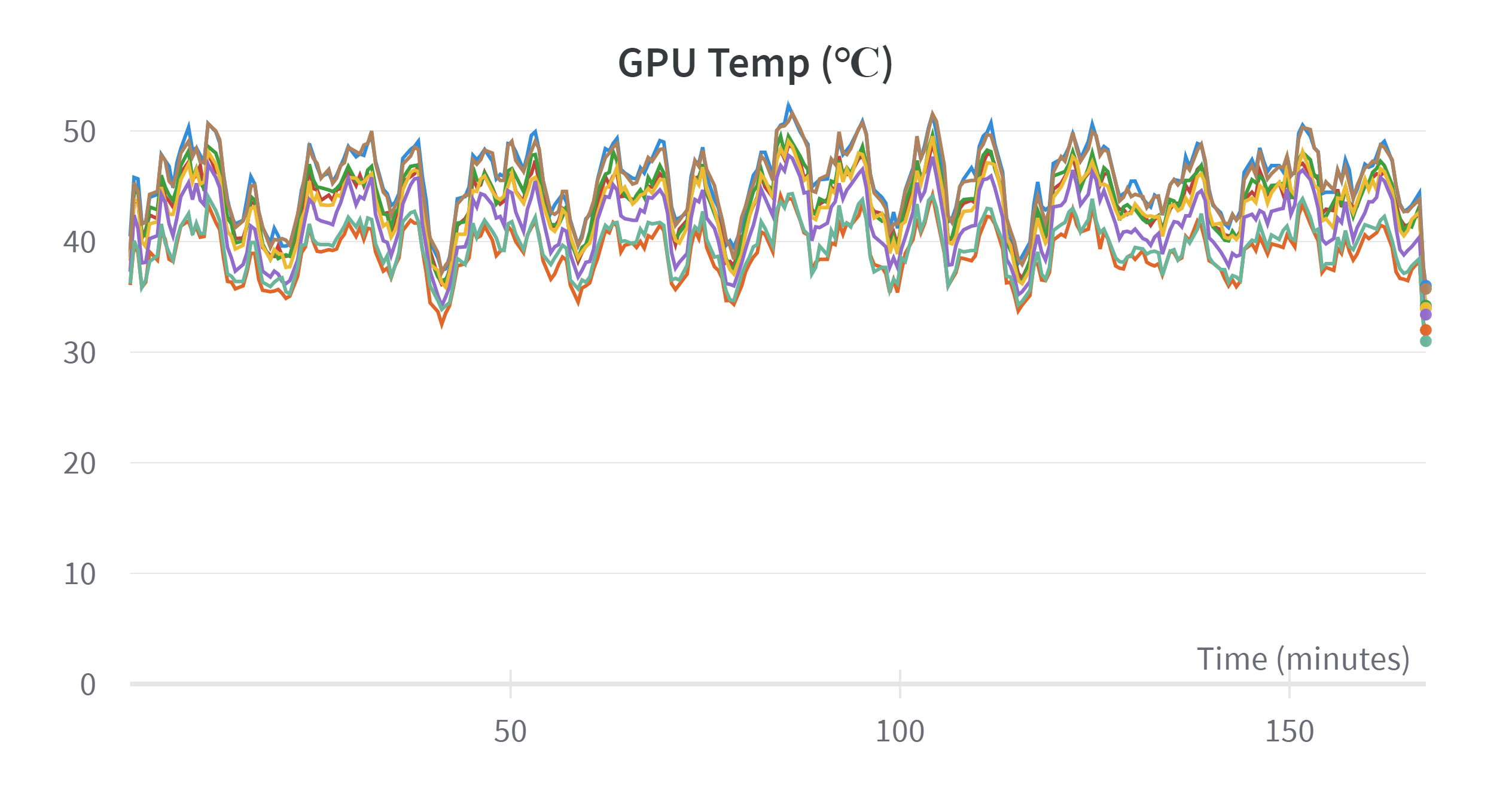

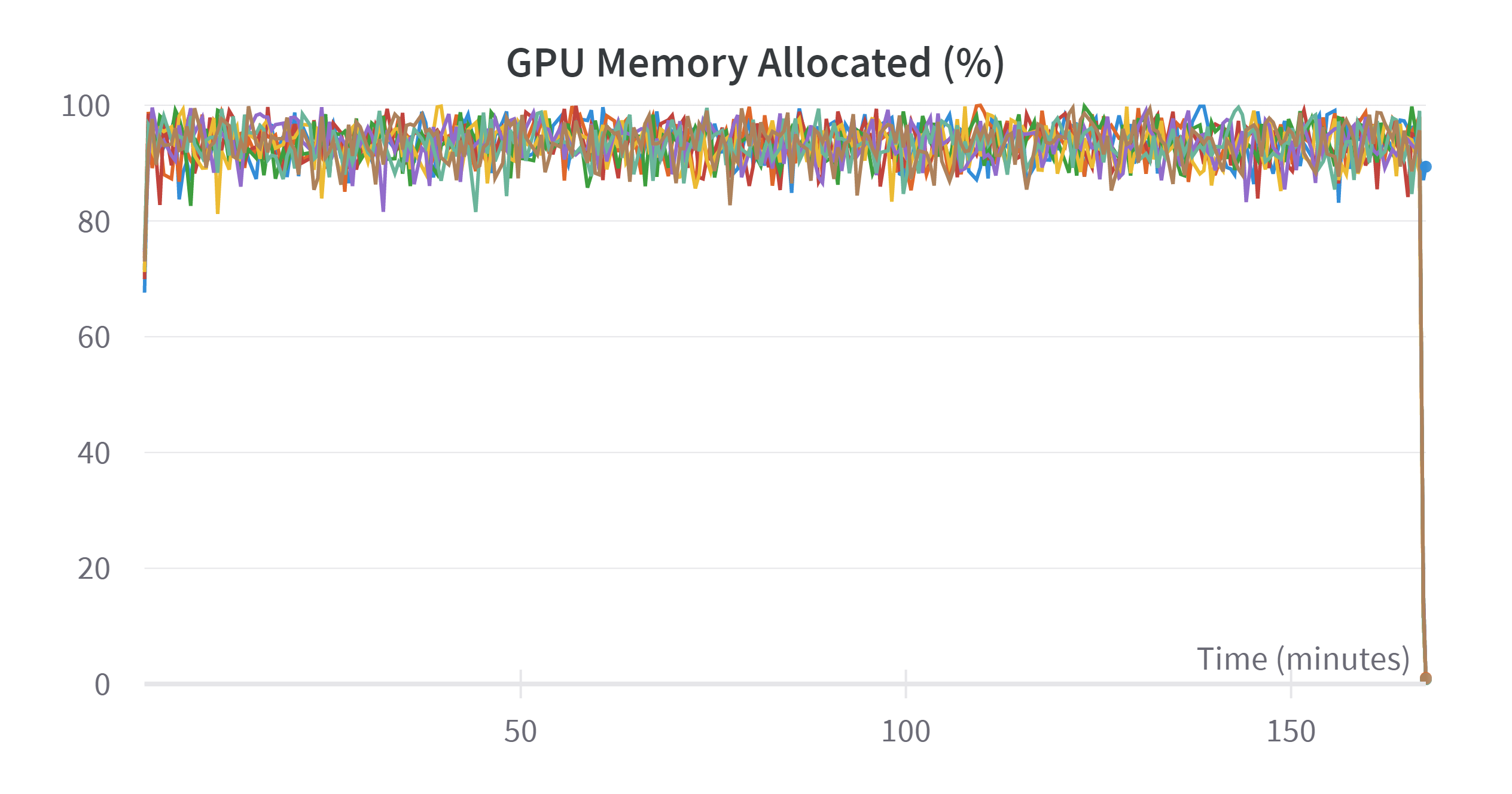

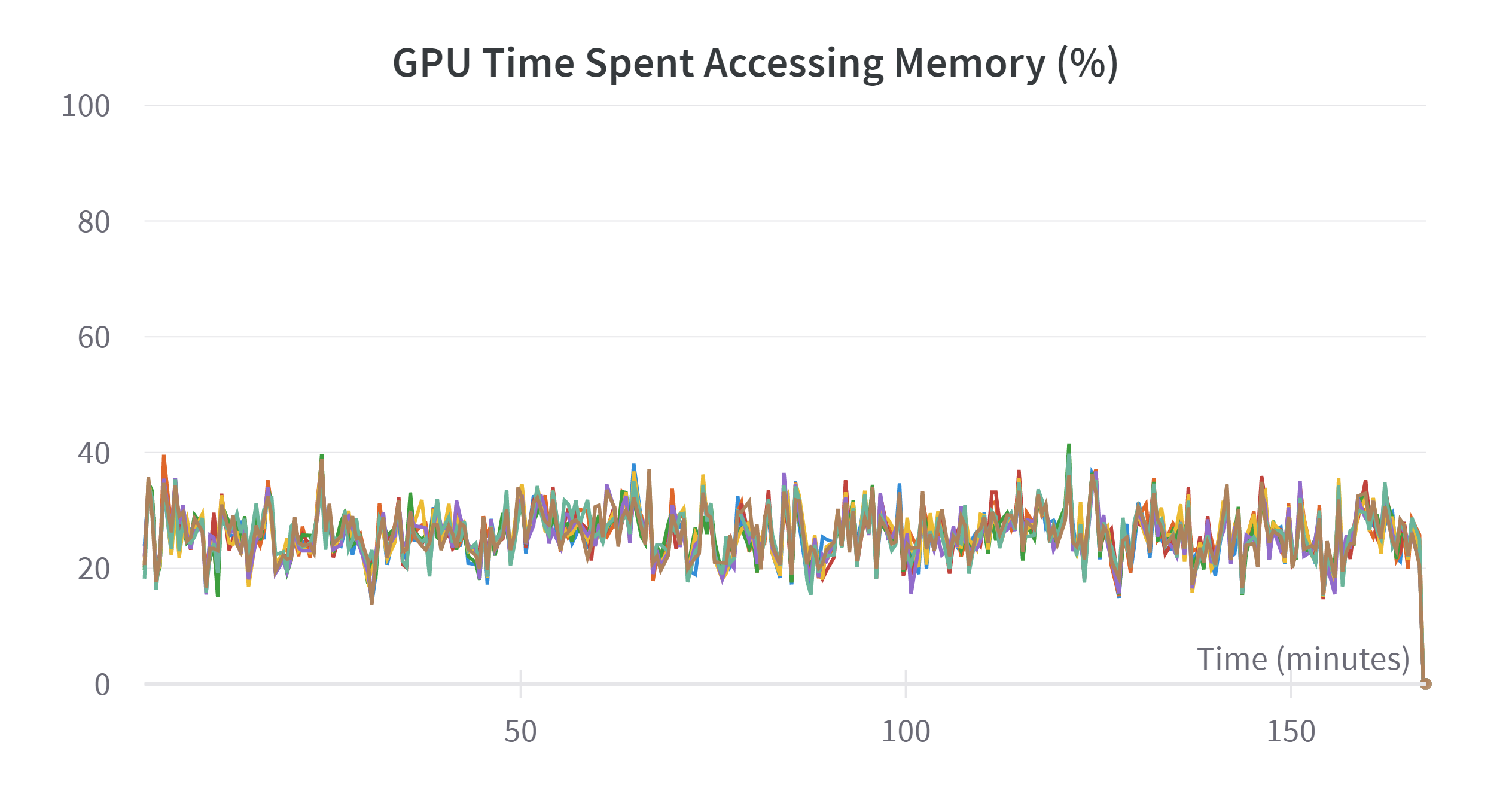

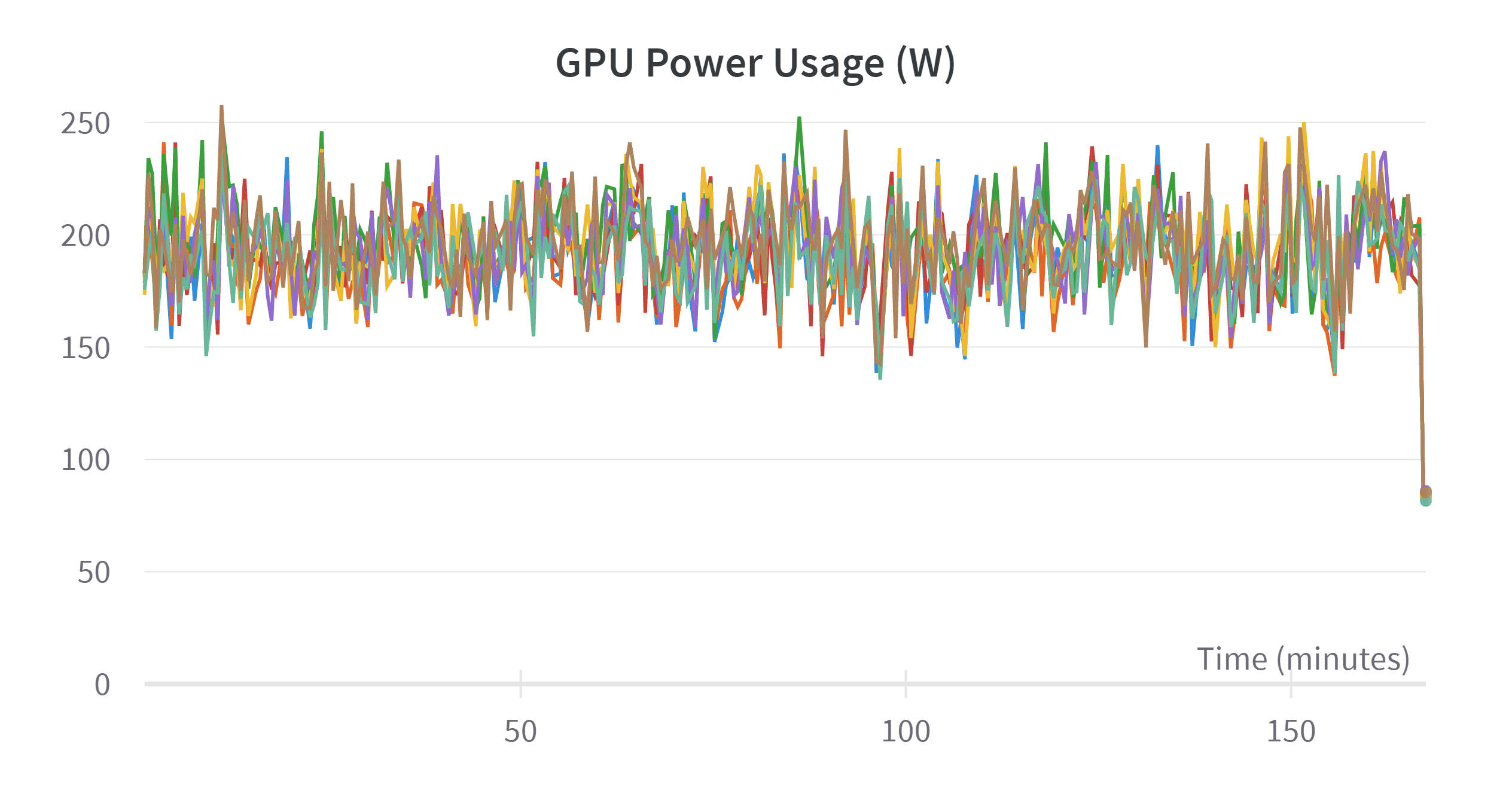

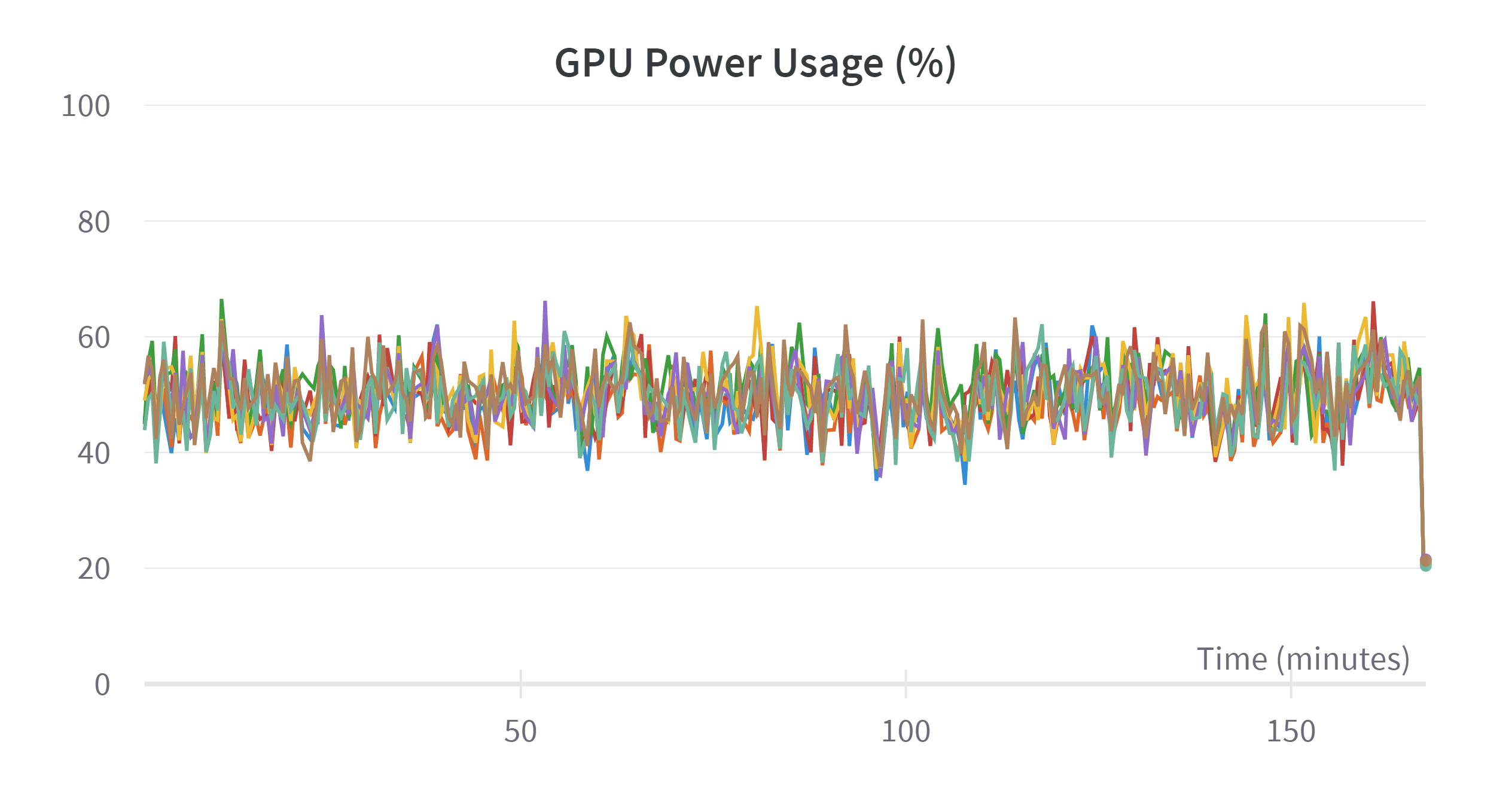

It only took 40 min on 8*NVIDIA A800-SXM4-80GB to finish training. I also trained the 13B model and it took 2h 43m 14s. The training processes are attached to the Appendix section.

Deploying

No context inferencing

We first deploy the code with the same prompt as training shown below.

utit = "### Instruction:"

btit = "### Response:"

def generate_prompt(instruction):

if g.load_context:

return "\n" + utit + f"""

{instruction}

""" + btit + "\n"

else:

return f"Below is an instruction that describes a task. Write a response that appropriately completes the request." + "\n\n" + utit + f"""

{instruction}

""" + btit + "\n"

Disable the load_context inference (no conversation) , with the first user input shown below, this is what the model sees:

Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:

How to sort a list in Python?

### Response:

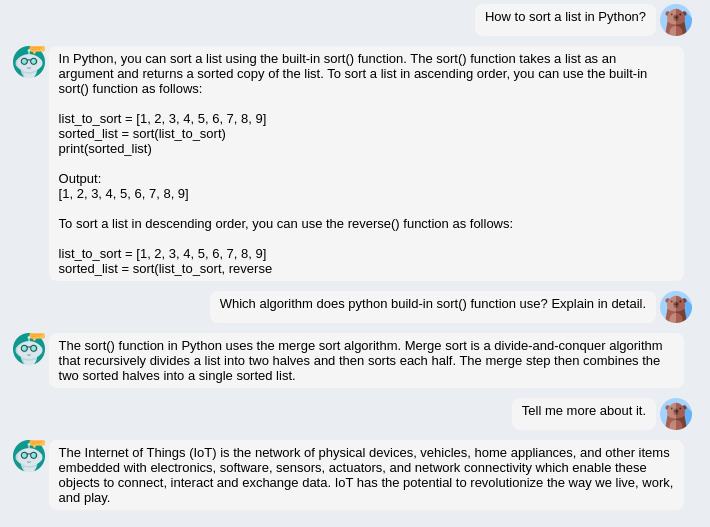

Below is the raw result (only exclude the user prompt before its real answer, no truncation or other preprocessing) The performance looks pretty good actually.

Note that the second round, the AI responses my question perfectly because I give it enough information in this single message. But for the 3rd instruction, I ask, tell me more about it. The model misunderstood the meaning of "it" to IoT because it has no access to the chat history.

Note that the second answer is WRONG. Python uses Timsort algorithm as the default sort algorithm. And TimSort is actually a combination of Merge sort and Insert sort. For the same question with different context, the model first give an answer of Merge sort, then Insert sort in next section.

Inferencing w context

method 1

Now we turn load_context on, mimicking what we did to the original llama code. For example for the second rounds of the conversation below, after the user's message inputed, this is what the model sees:

Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:

How to sort a list in Python?

### Response:

In Python, you can sort a list using the built-in sort() function. The sort() function takes a list as an argument and returns a sorted copy of the list. To sort a list in ascending order, you can use the built-in sort() function as follows:

list_to_sort = [1, 2, 3, 4, 5, 6, 7, 8, 9]

sorted_list = sort(list_to_sort)

print(sorted_list)

Output:

[1, 2, 3, 4, 5, 6, 7, 8, 9]

To sort a list in descending order, you can use the reverse() function as follows:

list_to_sort = [1, 2, 3, 4, 5, 6, 7, 8, 9]

sorted_list = sort(list_to_sort, reverse

### Instruction:

Which algorithm does this function use? Explain in detail.

### Response:

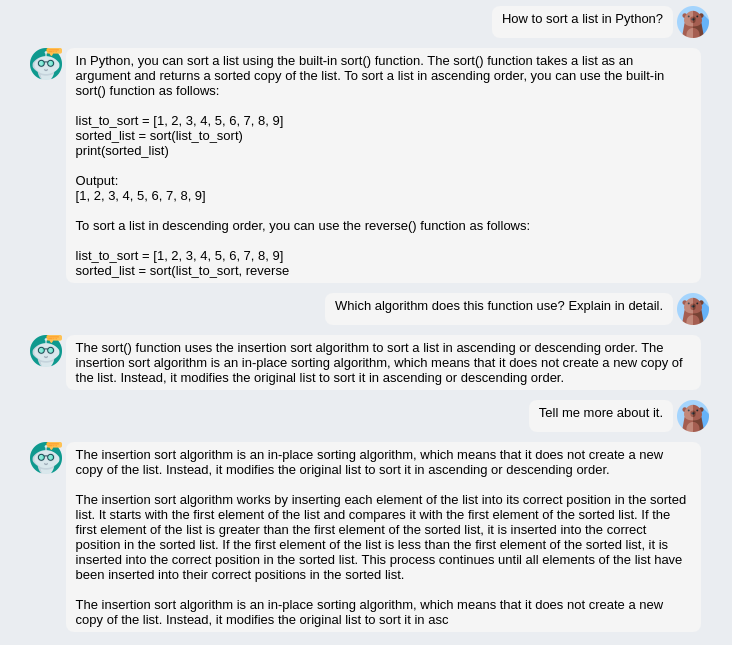

we show the raw answer as before:

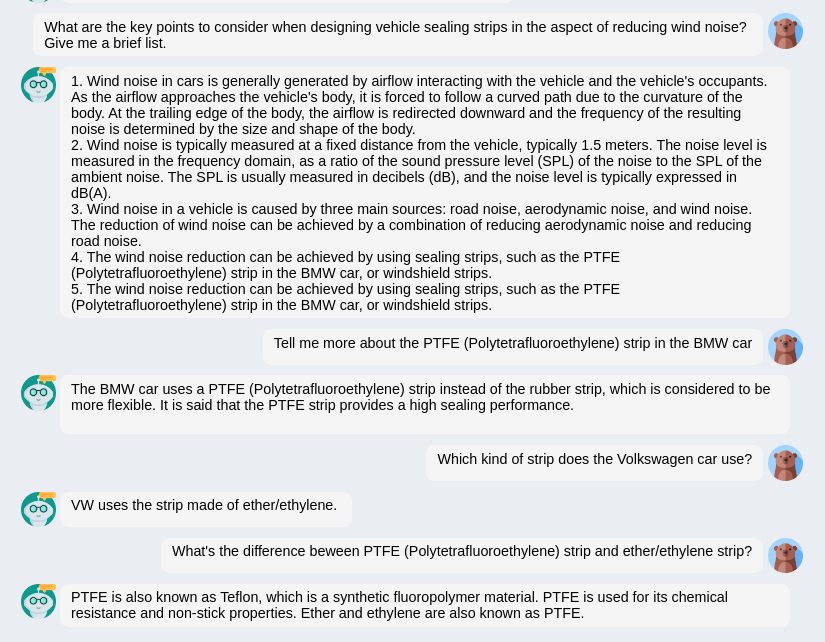

Note the second round looks pretty good, the model know this function means the built-in sort function in Python from the context. But for the 3rd round, the model starts to generate repetitive words. Because this model is not trained with conversation before.

The conservation ability even lower than the llama original with few shot learning.

method 2

A second method (mainsteam) is appending the new message directly to the history as a big ### Instruction. For example for the second round, this is what the model sees:

Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:

How to sort a list in Python?

In Python, you can sort a list using the built-in sort() function. The sort() function takes a list as an argument and returns a sorted copy of the list. To sort a list in ascending order, you can use the built-in sort() function as follows:

list_to_sort = [1, 2, 3, 4, 5, 6, 7, 8, 9]

sorted_list = sort(list_to_sort)

print(sorted_list)

Output:

[1, 2, 3, 4, 5, 6, 7, 8, 9]

To sort a list in descending order, you can use the reverse() function as follows:

list_to_sort = [1, 2, 3, 4, 5, 6, 7, 8, 9]

sorted_list = sort(list_to_sort, reverse

Which algorithm does this function use? Explain in detail.

### Response:

We can see a similar performance as before. The conversation works until the 3rd round, where the model returns repetitive answers.

Comments on standford alpaca

Compared with the original llama model, after instructional fine tuning, alpaca shows a better performance answering questions

- Far less prompt needed to get a better performance on answering questions.

- Not need to truncate the response as it only tends to answer the instruction.

The negative side is that

- Wrong and misleading answer

- Limited conversation ability as it is not trained for answering conversation. The conservation ability even lower than the llama original with few shot learning.

ON NEXT

Considering this is a very hot topic and there are new repos and trending models coming out everyday.

Next we will try to

- Reproduce the alpaca-lora, which allows alpaca training more efficiently.

- Take a look at other llama extensions, such as

- the Chinese alpaca, model that retrained llama and alpaca-lora with more Chinese dataset

- the GPT4all, an opensource mode based on lamma and alpaca that using GPT4 dataset

Appendix

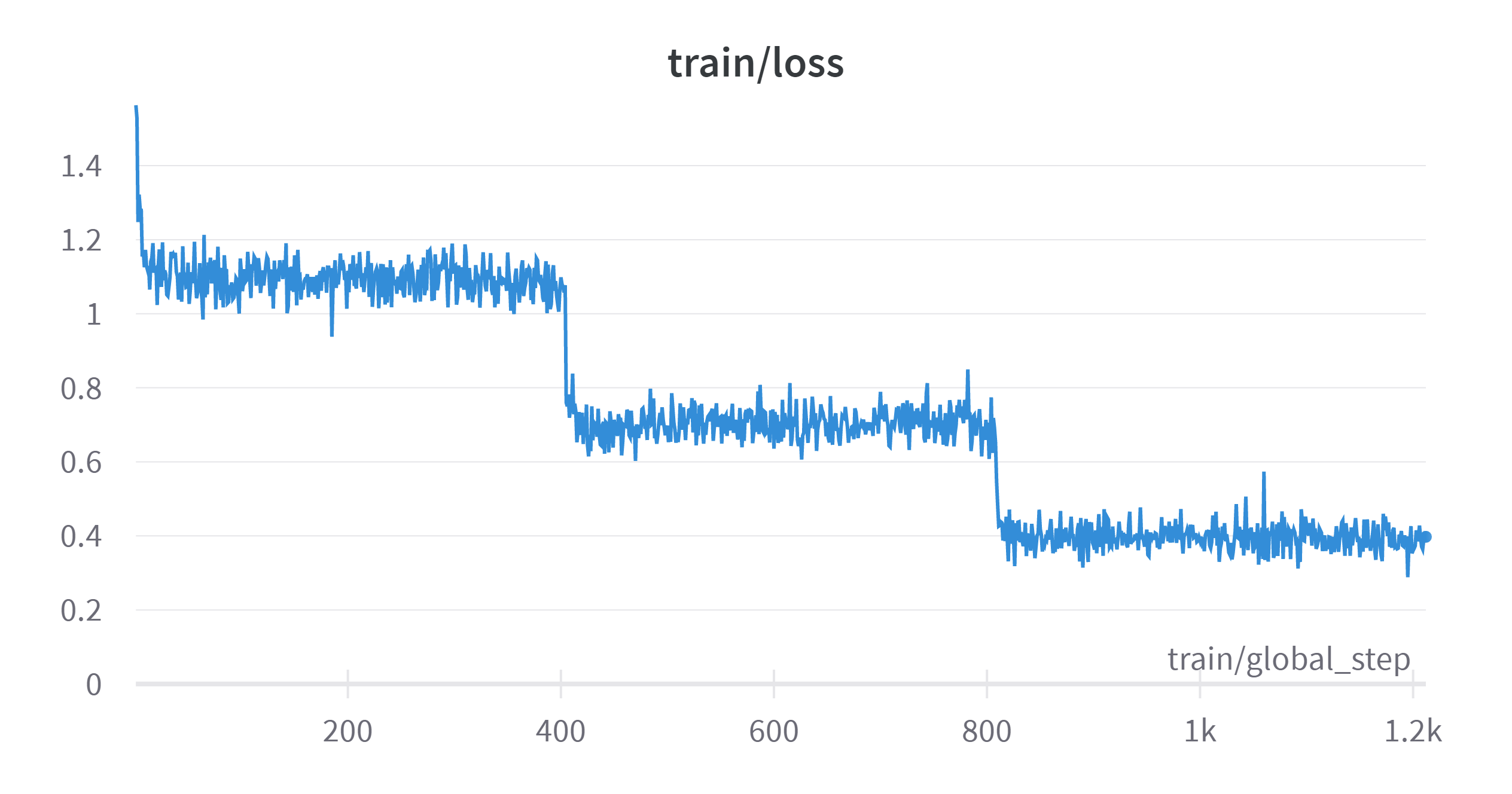

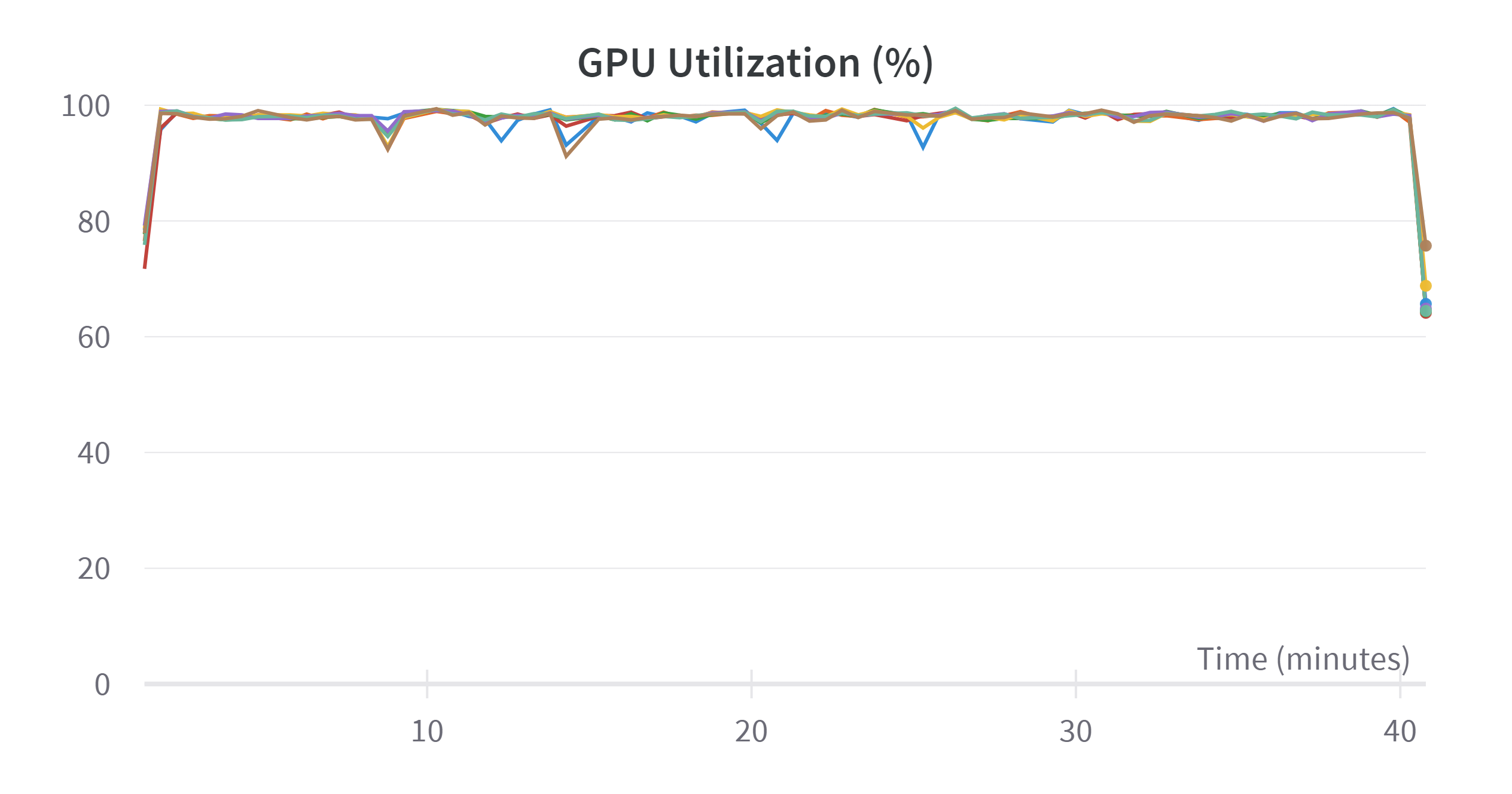

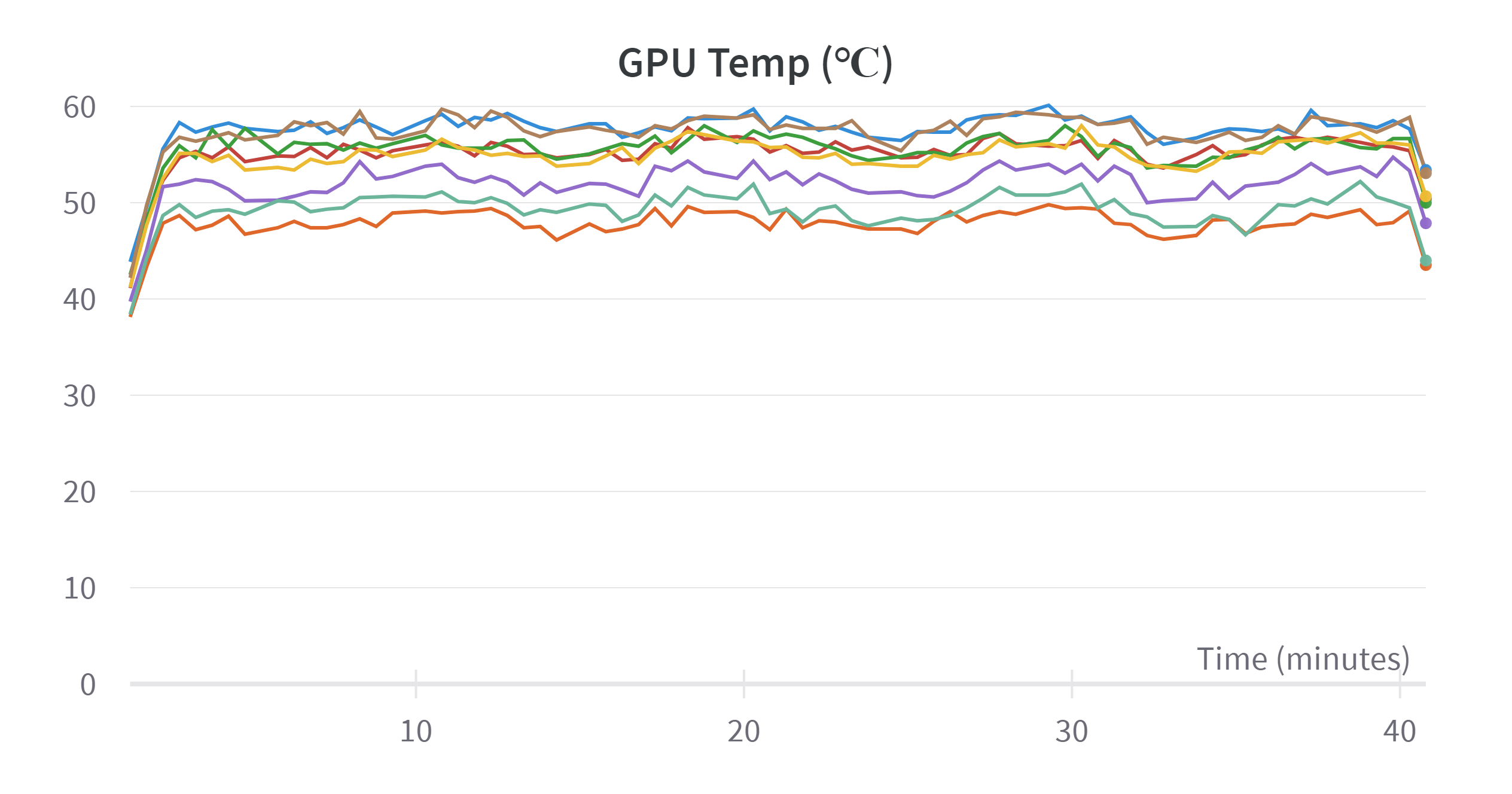

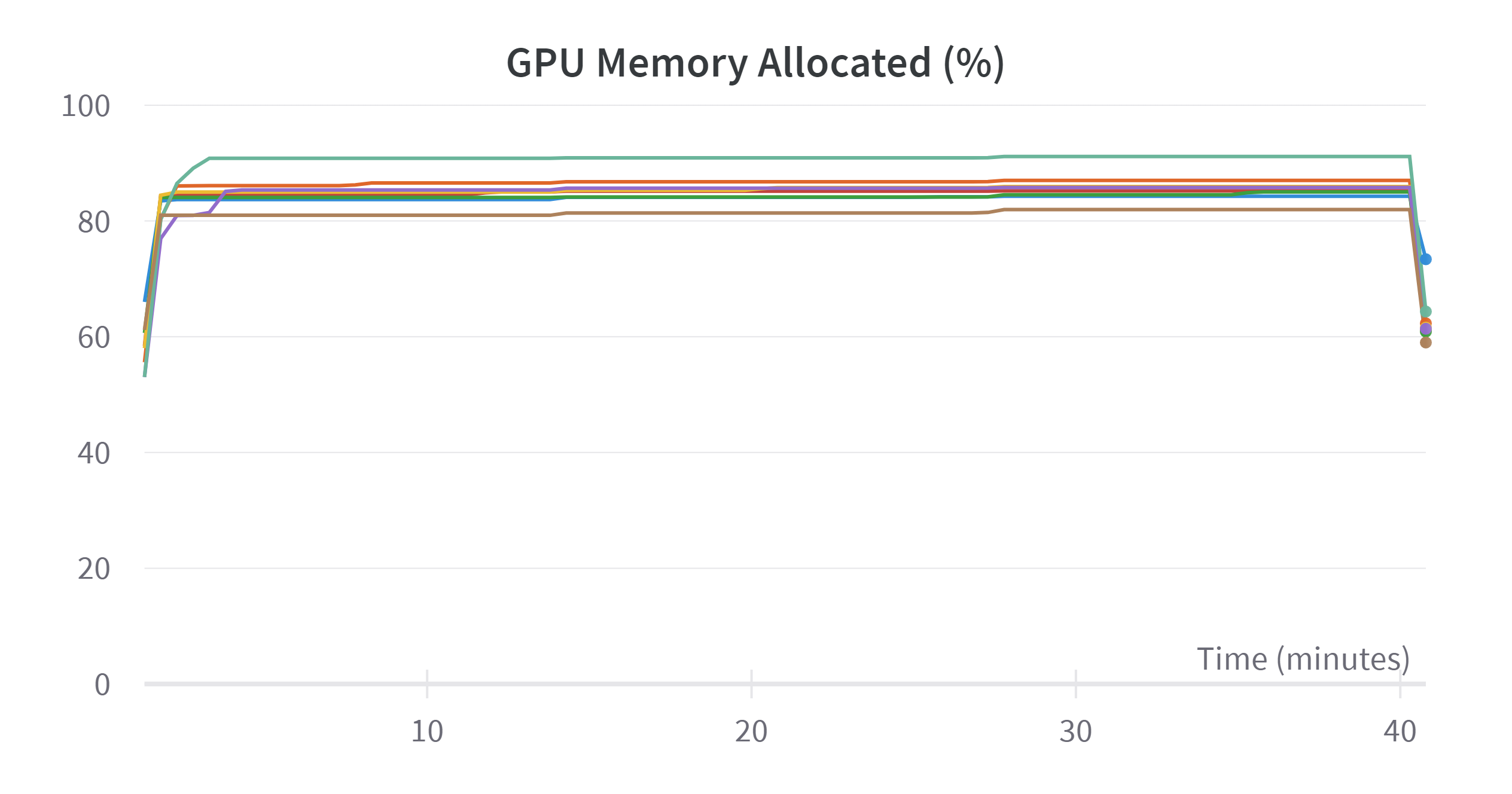

7B training

| Overview | |

|---|---|

| State | finished |

| Start time | April 1st, 2023 at 10:33:31 am |

| Duration | 40m 41s |

| Hostname | localhost.localdomain |

| OS | Linux-3.10.0-957.el7.x86_64-x86_64-with-glibc2.17 |

| Python version | 3.9.12 |

| Python executable | /home/conda/llama/bin/python |

| Command | /home/singleGPU/chatbot/fintune/stanford_alpaca-main/train.py --model_name_or_path ../llama_weights_converted/7B/ --data_path ./alpaca_data_cleaned.json --bf16 True --output_dir ../alpaca_weight_tuned/7B_cleaned --num_train_epochs 3 --per_device_train_batch_size 4 --per_device_eval_batch_size 4 --gradient_accumulation_steps 4 --evaluation_strategy no --save_strategy steps --save_steps 2000 --save_total_limit 1 --learning_rate 2e-5 --weight_decay 0. --warmup_ratio 0.03 --lr_scheduler_type cosine --logging_steps 1 --fsdp "full_shard auto_wrap" --fsdp_transformer_layer_cls_to_wrap LlamaDecoderLayer --tf32 True |

| System Hardware | |

|---|---|

| CPU count | 56 |

| GPU count | 8 |

| GPU type | NVIDIA A800-SXM4-80GB |

| Train logs: | |

|---|---|

| epoch | 3 |

| global_step | 1212 |

| learning_rate | 2e-5 |

| loss | 0.3975 |

| total_flos | 153924206059323400 |

| train_loss | 0.7330102721850077 |

| train_runtime | 2421.2348 |

| train_samples_per_second | 64.074 |

| train_steps_per_second | 0.501 |

| Training process visualization | |

|---|---|

|

|

|

|

|

|

|

|

13B training

| Overview | |

|---|---|

| State | finished |

| Start time | April 1st, 2023 at 11:20:25 am |

| Duration | 2h 47m 20s |

| Hostname | localhost.localdomain |

| OS | Linux-3.10.0-957.el7.x86_64-x86_64-with-glibc2.17 |

| Python version | 3.9.12 |

| Python executable | /home/conda/llama/bin/python |

| Command | /home/singleGPU/chatbot/fintune/stanford_alpaca-main/train.py --model_name_or_path ../llama_weights_converted/13B/ --data_path ./alpaca_data_cleaned.json --bf16 True --output_dir ../alpaca_weight_tuned/13B_cleaned --num_train_epochs 3 --per_device_train_batch_size 2 --per_device_eval_batch_size 2 --gradient_accumulation_steps 8 --evaluation_strategy no --save_strategy steps --save_steps 2000 --save_total_limit 1 --learning_rate 2e-5 --weight_decay 0. --warmup_ratio 0.03 --lr_scheduler_type cosine --logging_steps 1 --fsdp "full_shard auto_wrap" --fsdp_transformer_layer_cls_to_wrap LlamaDecoderLayer --tf32 True |

| System Hardware | |

|---|---|

| CPU count | 56 |

| GPU count | 8 |

| GPU type | NVIDIA A800-SXM4-80GB |

| Train logs: | |

|---|---|

| epoch | 3 |

| global_step | 1212 |

| learning_rate | 2e-5 |

| loss | 0.3184 |

| total_flos | 242450863476441100 |

| train_loss | 0.6685134294648768 |

| train_runtime | 9978.7829 |

| train_samples_per_second | 15.547 |

| train_steps_per_second | 0.121 |

| Training process visualization | |

|---|---|

|

|

|

|

|

|

|

|

References

LangChain document: LangChain 0.0.128

Alpaca: tatsu-lab/stanford_alpaca

Alpaca data cleaned: yahma/alpaca-cleaned

Self instruct: yizhongw/self-instruct

Hugging face Transformer: huggingface/transformers

Hugging face installation guide

Hugging face llama pull request LLaMA Implementation #21955

- Few-shot learning in practice: GPT-Neo and the 🤗 Accelerated Inference API ↩︎

- Example of Prompts ↩︎

- Alpaca: A Strong, Replicable Instruction-Following Model ↩︎

- Wang, Y., Kordi, Y., Mishra, S., Liu, A., Smith, N. A., Khashabi, D., & Hajishirzi, H. (2022). Self-Instruct: Aligning Language Model with Self Generated Instructions. arXiv preprint arXiv:2212.10560. ↩︎